Digital South Yorkshire:

High Performance Data Centre for South Yorkshire

Colin Smythe

25th August 2004

2006 © Dunelm Services Limited


This report is an initial investigation into the suitability of providing a High Performance Data Centre (HPDC) within South Yorkshire. A high performance data centre is defined as the provision of a virtual service that combines the traditional features of a data centre with the high performance computing capabilities of Grid. In the IT literature, such a facility is also termed ‘The New Data Centre’. This report is produced under contract to Digital South Yorkshire. This work was commissioned as a response to a request from Tony Newson of Objective 1. This report should be used to set the groundwork for a series of further investigations into the provision of a high performance data centre that combines the usage of Grid technology with the broader service capabilities of a data-centre.

The virtual HPDC consists of three core physical sites, one primary and two secondary. These three sites are linked using a very high-speed network (1-10Gbps). Each site has a full service capability and has data storage replication of the other sites. The difference between the primary and secondary sites is in the available Grid processing power and that only the primary site has resident support staff. Access to the service is supported through an Internet portal linked to a broad range of telecommunications suppliers and a mix of private wire/wireless access networks. The wireless access network should be made available throughout South Yorkshire to provide anywhere, anytime access to the HPDC virtual service.

The services that can be supported through the HPDC include:

- System simulation, modelling and data visualization – application domains include aerospace and mechanical engineering, biological systems modelling, etc;

- Data mining and archiving – the ability to search and analyse the data sets within the data centre;

- Digital rights management capable digital repository – the provision of services that enable organizations to use state-of-the-art digital rights management to control how their material may be accessed and used;

- Generic e-commerce managed service – this generic service should enable an organization to have its material made available for electronic download using a range of standard e-commerce services;

- Application service provision – the provision of applications through a remote service façade. This includes the provision of Learning Management Systems to support e-learning.

The sectors that would benefit from the provision of the HPDC within South Yorkshire are a reflection of the four key cluster-groups, namely: Creative & Digital Industries, Bioscience, Environmental & Energy Technologies, and Advanced Manufacturing & Metals. There is considerable synergy between the South Yorkshire HPDC and the proposed Knowledge & Learning Institute (KLI), the Creative Content Hub (CCH), the Design Centre, the White Rose Consortium Grid, the South Yorkshire e-Learning Programme (SYeLP), the Software Factory at the University of Sheffield and eCampus. eCampus would be a strong contender as the primary host site.

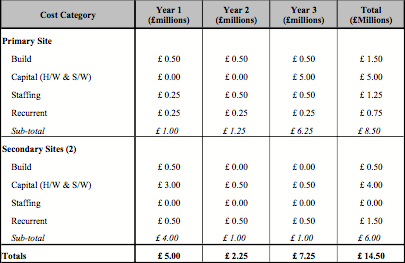

It is predicted that the cost of provision of the South Yorkshire HPDC over the first three years is at least £14.5 million, with a more realistic cost of £16-18 million. It is projected that matched money and initial service support revenues during the same period would be in excess of £6 million. It is also projected that the initial service could be made available before the end of 2005 with the full service available in September 2006, at the earliest. A more realistic full service provision date would be September 2007.

The further work that needs to be undertaken as soon as possible includes:

- An investigation into the benefits that would accrue due to the creation of the HPDC;

- An evaluation of the risks involved in the creation of the HPDC;

- An analysis of the size of the potential market for the HPDC services in terms of type and range of clients;

- The definition of the services that are to be supplied by the HPDC;

- The development of the commercial model that will be required to sustain the HPDC.

One way forward is to establish a South Yorkshire High Performance Data Centre (SY-HPDC) Pilot. The Pilot should run for at least 12 months. It is recommended that the aim of the Pilot is to investigate the business models that are required to establish commercial sustainability and that funding in the region of £1,250,000-£1,000,000 is made available.

1.1 Historic Perspective

1.2 Scope & Context

This report is produced under contract to Digital South Yorkshire. This work was commissioned as a response to a request from Tony Newson of Objective 1. This report should be used to set the groundwork for a series of further investigations into the provision of a high performance data centre that combines the usage of Grid technology with the broader service capabilities of a data-centre. It is not the intention of this report to make any definitive statement on:

a) The commercial justification for the provision of a high performance data-centre;

b) The final nature of the architecture or the range of services that could be made available;

c) The set of technologies that should be used for the services.

1.3 Structure of this Document

The rest of this document consists of:

| Section | Content |
|---|---|
| 2. Data Centres & GRID Technology | Background material that explains the key facts of data centres, Grid technology and service architectures; |
| 3. A South Yorkshire High Performance Data Centre | The description of the proposed solution. This includes a description of the proposed service provision, capabilities and usage, target user communities and its physical realisation, and the technological and commercial implications of establishing such a centre; |
| 4. A Way Forward | A proposal for the way in which the South Yorkshire High Performance Data Centre can be realised in terms of partners, delivery timeline, outline costs and identification of urgent further work particularly in the establishment of a business feasibility evaluation pilot; |
| References | The reference material that is cited throughout the report. |

1.4 Nomenclature and Acronyms

ASIC Application Specific Integrated Circuit

ASP Application Service Provider

CCH Creative Content Hub

DCML Data Centre Mark-up Language

DfES Department for Education & Skills

DWP Department for Work & Pensions

EPSRC Engineering & Physical Sciences Research Council

GGF Global Grid Forum

GRAM Globus Resource Allocation Manager

HPC High Performance Computing

HPDC High Performance Data Centre

ICT Information & Communications Technology

IETF Internet Engineering Task Force

IT Information Technology

ISP Internet Service Provider

KLI Knowledge & Learning Institute

LMS Learning Management System

OGSA Open Grid Services Architecture

OST Office for Science & Technology

PPARC Particle Physics & Astronomy Research Council

SIG Special Interest Group

SoA Service-oriented Architecture

SOAP Simple Object Access Protocol

SRB Storage Resource Broker

SYeLP South Yorkshire e-Learning Programme

VPN Virtual Private Network

XML eXtensible Mark-up Language

2.1 Services & Service-oriented Architectures

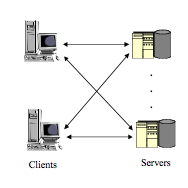

A Service-oriented Architecture (SoA) is an infrastructure

that is designed to deliver managed services to a user community. In its

latest incarnation a SoA is based upon web-services with access to the service

supported through a web browser. However, during the past twenty years there

have been several other versions of SoAs e.g. Application Service Provider

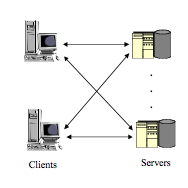

(ASP). A typical SoA is shown in Figure 2.1.

The basic concept is that a service is supplied by remote server(s) to the clients. Both ‘thin’ and ‘thick’ clients are used depending on the preference at the time; the thickness of the client depends on the amount of local processing capability and intelligence required to augment the remote service – a thicker client has more local processing capability. The ultimate ‘thin client’ is nothing more than a web browser. The advantage of the ‘thin-client’ approach is that new services can be easily added without requiring changes to the client but these tend to have high communications overhead.

|

|

|

|

Figure 2.1 Service-oriented architectures.

|

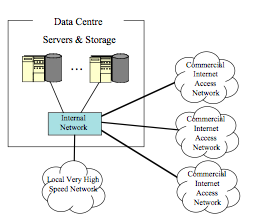

Figure 2.2 Data-centre services.

|

2.2 Data Centres

Data centres are used as the central feature for services such as Data Warehousing, Internet Service Provision, etc. Figure 2.2 gives a schematic representation of a data centre, the core features of which are:

- A substantial data storage capability. This storage should also support intelligent search and retrieval capabilities;

- Access to a wide range of commercially available network suppliers. This enables the data centre to be accessed directly from a wide range of telecommunication service suppliers;

- Housing for a large number of servers that are supplied by clients of the data centre. These clients use the data centre to reliably house their servers.

Table 2.1 contains a summary of the types of services that are usually available through a data centre.

Table 2.1 Classification of the data-centre services.

| Application Classification | Explanation | Examples |
|---|---|---|
| Application-centric | Making network-savvy applications available. This is particularly useful for expensive applications that can be made available through service based tariffing. | eCommerce provision. |
| Storage-centric | The provision of extensive, reliable long-term data storage. This includes archival media as well as disk-based. | Data archiving, data mining, digital repository etc. The Data Centre Mark-up Language (DCML) could be used. |
| Access-centric | Provision of access to the Internet through as broad a set of telecommunication suppliers as possible. | Internet Service Providers. |

2.3 GRID Technology

IBM define the GRID as:

“Grid computing enables the virtualization of distributed computing and data resources such as processing, network bandwidth and storage capacity to create a single system image, granting users and applications seamless access to vast IT capabilities. Just as an Internet user views a unified instance of content via the Web, a grid user essentially sees a single, large virtual computer.

At its core, grid computing is based on an open set of standards and protocols e.g. Open Grid Services Architecture (OGSA), which enable communication across heterogeneous, geographically dispersed environments. With grid computing, organizations can optimize computing and data resources, pool them for large capacity workloads, share them across networks and enable collaboration.”

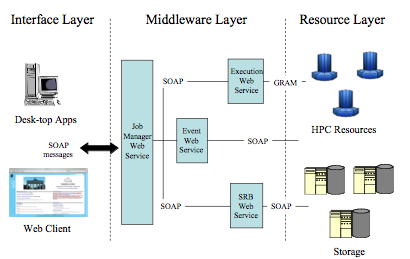

Figure 2.3 The Grid three-layered architecture.

The key points to note from Figure 2.3 are:

- Access to the full service is either through the web client for the service or the appropriate service specific desk-top application. These two approaches hide the physical nature of the high performance computing resource or the data centre storage;

- Access to the services is through a Web Services interface i.e. between the Interface and Middleware layers. This interface is realized as a series of SOAP messages that are used to carry XML data;

- Access to the Grid computing resource is via the Globus Resource Allocation Manager (GRAM). The Globus GRID toolkit is the preferred open source solution for Grid software (Globus Toolkit ‘3.2 alpha’ was released on 26th November, 2003) [Globus, 03].
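The Web Services interface described above, in which SOAP messages carry XML data between the Interface and Middleware layers, can be illustrated with a minimal sketch. It uses only the Python standard library. The service namespace `urn:example:hpdc:jobs`, the `SubmitJob` operation and its two fields are hypothetical names invented for illustration; they are not part of Globus, OGSA or any HPDC specification.

```python
import xml.etree.ElementTree as ET

SOAP_NS = "http://schemas.xmlsoap.org/soap/envelope/"  # SOAP 1.1 envelope namespace
JOB_NS = "urn:example:hpdc:jobs"  # hypothetical service namespace (assumption)


def build_submit_job_request(application: str, cpu_hours: int) -> str:
    """Return a SOAP 1.1 envelope whose body carries an XML job request."""
    ET.register_namespace("soap", SOAP_NS)
    envelope = ET.Element(f"{{{SOAP_NS}}}Envelope")
    body = ET.SubElement(envelope, f"{{{SOAP_NS}}}Body")
    # The operation and field names below are illustrative only.
    request = ET.SubElement(body, f"{{{JOB_NS}}}SubmitJob")
    ET.SubElement(request, f"{{{JOB_NS}}}Application").text = application
    ET.SubElement(request, f"{{{JOB_NS}}}CpuHours").text = str(cpu_hours)
    return ET.tostring(envelope, encoding="unicode")


def parse_submit_job_request(message: str) -> dict:
    """Extract the job request fields from a SOAP envelope (middleware side)."""
    root = ET.fromstring(message)
    request = root.find(f"{{{SOAP_NS}}}Body/{{{JOB_NS}}}SubmitJob")
    return {
        "application": request.findtext(f"{{{JOB_NS}}}Application"),
        "cpu_hours": int(request.findtext(f"{{{JOB_NS}}}CpuHours")),
    }
```

A web client or desk-top application would build the message with `build_submit_job_request("cfd-solver", 12)` and send it over HTTP; the middleware would recover the request with `parse_submit_job_request` before handing the job to the resource manager.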

“I believe we are entering a new phase of Grid computing where standards will define Grids in the same way -- by enabling Grid systems to become easily-built commodity systems. I'm going to call standard Grid systems ‘Third-Generation Grids,’ or 3G Grids.

First generation ‘1G Grids’ involved local ‘Metacomputers’ with basic services such as distributed file systems and site-wide single sign on, upon which adventurous software developers created distributed applications with custom communications protocols. Gigabit test beds extended 1G Grids across distance, and attempts to create ‘Metacenters’ explored issues of inter-organizational integration. 1G Grids were totally custom made, top to bottom – proofs of concept.

2G Grid systems began with projects such as Condor, I-WAY (the origin of Globus) and Legion (origin of Avaki), where underlying software services and communications protocols -- plumbing -- could be used as a basis for developing distributed applications and services. 2G Grids offered basic building blocks, but deployment involved significant customization and filling in lots of gaps. Independent deployments of 2G Grid technology today involve enough customized extensions that interoperability is problematic, and interoperability among 2G Grid systems is very difficult. That’s why we need 3G Grids.

The GGF community is taking lessons learned from 1G and 2G Grids and from web services technologies and concepts to create 3G architectures like the Open Grid Services Architecture (OGSA), whereby a set of common interface specifications supports the interoperability of discrete, independently developed services. The recently released Open Grid Services Infrastructure (OGSI) service specification is the keystone in this architecture.

By introducing standard technical specifications, 3G Grid technology will have the potential to allow both competition and interoperability not only among applications and toolkits, but among implementations of key services. You know that you've got a commodity when you can mix and match components. But this potential won't be achieved by a better definition for ‘Grids’ and it certainly will take more than coining a term like 3G Grids. It will only come if the community continues to work hard at defining standards. That is what GGF is all about.”

There is general consensus that widespread adoption of Grid technology as a commodity is some 5-10 years away. There is a significant opportunity over the next five years to create new applications and technology that will facilitate the adoption of Grid as well as converting many established technologies for optimal usage of Grid e.g. conversion of ASIC simulations. South Yorkshire should attempt to establish international credibility in the provision of service-based Grid applications.

Applications best suited to the GRID fall into four classifications, as described in Table 2.2. However, a note of caution needs to be observed. There is very little experience in the application of Grid technology in commercial service environments i.e. where a Grid is made available as a general resource as a commercial service. Most experience is on using Grid to support a specific application e.g. data manipulation within a single Enterprise.

Table 2.2 Classification of the applications of GRID.

| Application Classification | Explanation | Examples |
|---|---|---|
| Community-centric | GRID used to knit organizations together for collaboration. | On-line Learning. Virtual Laboratory, etc. |
| Data-centric | GRID used to integrate the access and use of multiple data and compute resources. | e-Science, Digital Repositories, etc. |
| Compute-centric | Processor farm capability where the ‘job’ can be submitted in batch form. | Graphical render-farms, molecular analysis, etc. |
| Interaction-centric | Support for real-time response such that interactive computing is possible. | Simulation and visualization, and Metacomputing i.e. virtual desk-top supercomputer. |

Recent developments in, and improved understanding of, Grid technology have introduced two concepts:

- Access Grids – this is the collection of resources that support human collaboration across the Grid, including large-scale distributed meetings and training. They are typically dedicated facilities that explicitly contain the high quality audio and video technology necessary to provide an effective user experience;

- Utility computing – Grid computing is predominantly associated with the usage of large powerful computer facilities in very locally distributed systems e.g. in the same computing facility. Utility computing is used to refer to highly distributed systems in which many hundreds and thousands of processors, distributed over hundreds and thousands of square kilometres, are harnessed to achieve a particular processing objective. The SETI@Home project is a classic example of Utility Computing.

3.1 Service Provision

The following set of services should be made available through the high performance data centre (these are a reflection of the services described in Tables 2.1 and 2.2):

- Application-centric – application service provision in which applications are made available through remote access. This service enables an organization to obtain cost effective access to applications that would be too expensive or complex to mount and maintain locally;

- Storage-centric – reliable long-term digital data archiving. This could be used as a remote back-up for important digital repositories. The key property is that this is an exceptionally reliable storage facility that a user can easily access to recover any data lost at their primary site(s);

- Access-centric – basic access to a wide range of data networks and services. This would include support for web and email servers, IP telephony, videoconferencing, etc. through as many of the available service providers as possible e.g. BT, Torch, Energis, etc;

- Community-centric – the provision of managed services that support a particular community of use. This will include a wide range of eCommerce and collaborative facilities that will enable small and micro companies to cost effectively make their commercial products available through the Internet;

- Data-centric – the provision of a reliable digital data archive for which a series of data-access facilities are made available. This differs from the ‘storage-centric’ approach by making available a set of data management services e.g. intelligent search and retrieval, data mining capabilities, etc.

- Compute-centric – the provision of a powerful raw computing capability. This service provides a user with an on-demand, cost-effective powerful processing facility for a limited duration (this would be particularly useful for organizations that have their own Grid facilities but would like to have access to extra external capacity). This differs from the application-centric category because the application itself is supplied to run on the processor farm i.e. the user will have created or supplied the application;

- Interaction-centric – real-time service interaction that enables users to interact with the remotely available services. This would include support for ‘fast-twitch’ on-line gaming as well as data-set visualization.

3.2 Capabilities & Usage

The set of services available means that the high performance data centre can be used to support:

- System simulation and modelling – managed service access to specialist applications that will enable different sectors of science and engineering to model and analyze the design and capabilities of new artefacts. The supplied applications can be based upon generic techniques, such as computational fluid dynamics that are tailored for specific domains, or specialist applications already designed for a specific domain purpose. Application domains include geographic information systems, aerospace and mechanical engineering, biological systems modelling, electronic systems modelling, etc;

- Data visualization – managed service access for the visualization and real-time manipulation of large sets of data. This can be used to support the system simulation and modelling activities but should also be available as an independent service;

- Data mining and archiving – the ability to search and analyse the data sets within the data centre. This is a service available for the open data sets or data that is owned by the organization executing the data operations. It is important that the data is stored in ‘analysis friendly’ formats e.g. using the appropriate mark-up language;

- Digital rights management capable digital repository – the provision of services that enable digital content to be made available through a variety of electronic media. This service includes the usage of state-of-the-art digital rights management thereby enabling an organization to mount its content electronically and to control how this material may be accessed and the charge for that access. It is important that this service is well suited to the type of material created by the creative content hub participants;

- Generic e-commerce managed service – there is increasing reliance upon Internet-based sales for digital products. This generic service should enable an organization to have its material made available for electronic download using a range of standard e-commerce services. This includes support for on-line credit transactions;

- Application service provision – the provision of applications through a remote service façade. These applications can either be made available through batched job submissions or as interactive services. The former could involve processor intensive services whereas the latter is dependent upon regular interaction with the user e.g. word-processing. This service will enable an organization to cost effectively use services that would otherwise be too expensive to support e.g. due to licensing costs, etc.

- Learning management system (LMS) service – the provision of a secure LMS that can be accessed from anywhere and at anytime (this is a type of application service provision). This LMS service should be available in many different ways depending on the nature of the clients e.g. for schools, colleges, universities, corporates, etc. The LMS should enable the client to be able to control the way in which learning material is made available;

- Internet service provision – this is the standard ISP provision. This should not be supplied as a direct service in its own right to the client base i.e. clients using the HPDC could create such a service but the HPDC will not supply this itself. This ensures that the HPDC does not create direct competition to other ISPs located in the region.

3.3 Target User Communities

The target user communities can be categorised as: Public Sector, Private Sector, within the Sub-region and outside the Sub-region. Clearly, whatever the provision is within the Sub-region can also be made available outside of the Sub-region, albeit using a different funding model. Therefore, we will focus on the user communities within the sub-region, and in particular those identified in the Sub-region’s Cluster Plan [Objective1, 02], [SYForum, 01], [Yforward, 01] (the high performance data centre should be perceived as a key cross-cluster activity), namely:

- Public Sector

    - Universities – it is not cost effective for most Universities to maintain their own Grid capability. An on-demand Grid service arrangement would be ideal;

    - Colleges – the colleges could benefit from a combination of the services available to the University (research-oriented) and school (teaching-oriented) sectors;

    - Schools – the SYeLP would benefit from having access to a local data centre. This provision could also be augmented to support novel teaching applications that would usually have processing demands not available to a school;

    - Hospitals – there are several hospitals in the sub-region that have extensive data storage and management requirements. Of particular concern to this community will be the security of the data;

    - Government – the Local Councils, the Department for Education & Skills (DfES), the Department for Work & Pensions (DWP), etc. would benefit from access to reliable digital archive facilities;

- Private Sector

    - Advanced Manufacturing & Metals – provision of extensive modelling, simulation and visualization applications is of particular interest to this sector e.g. for the design of aircraft parts, more efficient steel manufacturing, etc. This will require the provision of a range of specialised applications that are specific to particular sectors;

    - Bioscience – this sector has extensive need for computer-based processing to support many different aspects e.g. molecular modelling, genetic modelling, biological systems modelling, etc. The services available should be based on those required by the new start-ups being created by the White Rose Universities;

    - Creative & Digital Industries – provision of the digital rights managed digital repository for selling locally created content; computing support for the Knowledge & Learning Institute (KLI), the Design Centre and the Creative Content Hub (CCH); render farm for the generation of digital images, videos, DVDs, etc; and general e-commerce facilities;

    - Environmental & Energy Technologies – provision of extensive modelling, simulation and visualization applications is of particular interest to this sector e.g. for the design of energy efficient buildings.

3.4 Physical Realisation

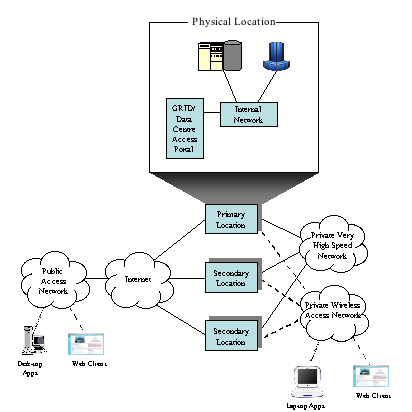

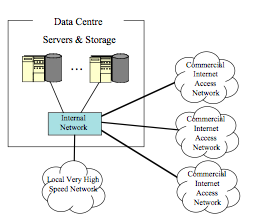

A schematic representation of the physical architecture of the HPDC is shown in Figure 3.1.

Figure 3.1 The physical architecture for the high performance data centre.

The salient features of this architecture are:

- The virtual HPDC consists of three core physical sites, one primary and two secondary. These three sites are linked using a very high-speed network (1-10Gbps). Each site has a full service capability and has data storage replication of the other sites. The difference between the primary and secondary sites is in the available Grid processing power and that only the primary site has resident support staff. The secondary sites are fully automated environments;

- Each site has its own data storage and processor farm. When all three sites are operational, a service request would be supported by one or more sites. The sites operate as an integrated virtual service environment. The system should withstand the total failure of two of the sites and each site should have redundant external service provision and failure recovery capability. A non-volatile storage archive facility will also be maintained at the three sites;

- Service access will be via the ‘Internet’, the private very high-speed network and the private wireless access network. The wireless access network will provide direct access to the service and will be available throughout the South Yorkshire sub-region. The wireless access will provide anywhere, anytime access to the HPDC services. Wireless support is important to enable ease of access without requiring cable-based connection to the service but it must be stressed that wireless is only one form of access to the system. Access to the HPDC will also be available as part of a Virtual Private Network (VPN) service that will enable organizations to make the HPDC a part of their VPN;

- The private very high-speed network will also support interconnection with other Grid nodes throughout the region, UK and the rest of the world. In particular it will enable interconnection with the White Rose Grid plus other commercial Grid nodes within the region;

- Primary access to the service will be through the ‘Internet’ portal. This portal enables users to access the service through whatever local access network is available. This is also the access point for the HPDC services to the global Internet. Client access to the portal will be supported through a standard web browser or by web-services enabled service specific application clients;

- When required it will be possible to create further secondary sites. This will increase the Grid processing capability.
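The resilience requirement above, that the service should survive the total failure of two of the three sites, can be put into rough numbers. The sketch below assumes that site failures are independent and uses an illustrative per-site availability of 99%; neither assumption comes from this report, and the function names are hypothetical.

```python
HOURS_PER_YEAR = 8760  # 365 days, ignoring leap years


def availability_any_of(site_availability: float, sites: int) -> float:
    """Availability of a service that stays up while at least one site is up.

    Assumes site failures are statistically independent (an idealisation:
    shared power, network or software faults would reduce the benefit).
    """
    return 1.0 - (1.0 - site_availability) ** sites


def expected_downtime_hours(availability: float) -> float:
    """Expected hours per year during which the service is unavailable."""
    return (1.0 - availability) * HOURS_PER_YEAR


if __name__ == "__main__":
    per_site = 0.99  # illustrative figure only, not a measured value
    for n in (1, 2, 3):
        a = availability_any_of(per_site, n)
        print(f"{n} site(s): availability {a:.6f}, "
              f"downtime {expected_downtime_hours(a):.3f} h/year")
```

Under these assumptions, each additional fully replicated site multiplies the probability of total outage by the single-site failure probability, which is why full data replication at all three sites matters as much as the number of sites.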

3.4.1 Utility Computing

The architecture shown in Figure 3.1 is a typical Grid approach. There are many under-utilised computing facilities around the sub-region e.g. many computer systems are idle outside of the normal working hours. Using the HPDC as a system management centre it is also possible to construct a Utility Computing facility. This is possible by establishing commercial service contracts so that computing facilities at external sites can be used at certain times of the day. The only constraints are that the computers are on a network that is accessible to the HPDC and that it is possible to use these remote computer systems cost effectively.
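The idea of contracting external computers for certain times of the day can be sketched as a simple availability check that a management centre might run before dispatching work. The `ExternalSite` record, the site names and the processor counts are hypothetical and purely illustrative; a real scheduler would also need to account for network reachability and cost.

```python
from dataclasses import dataclass
from datetime import time
from typing import Iterable


@dataclass(frozen=True)
class ExternalSite:
    """A contracted external facility and its agreed daily usage window."""
    name: str
    available_from: time   # start of the contracted window
    available_until: time  # end of the contracted window (exclusive)
    processors: int


def is_available(site: ExternalSite, at: time) -> bool:
    """True if `at` falls inside the site's contracted window."""
    start, end = site.available_from, site.available_until
    if start <= end:
        return start <= at < end
    # Window wraps past midnight, e.g. 18:00 to 08:00.
    return at >= start or at < end


def usable_processors(sites: Iterable[ExternalSite], at: time) -> int:
    """Total processors the management centre could harness at time `at`."""
    return sum(s.processors for s in sites if is_available(s, at))


if __name__ == "__main__":
    contracted = [
        ExternalSite("office-block", time(18, 0), time(8, 0), 40),
        ExternalSite("college-lab", time(22, 0), time(6, 0), 100),
    ]
    for hour in (12, 23, 7):
        print(hour, usable_processors(contracted, time(hour, 0)))
```

Handling windows that wrap past midnight is the one subtle point, since the idle period of an office machine typically runs from one evening to the next morning.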

3.5 Technological and Commercial Implications

If the HPDC is established then there are several technological and commercial implications for the sub-region:

a) The licensing arrangements for many well established software products are such that it is too expensive to use them in Grid systems. This is intentional on the part of the vendors, to optimise their revenue streams. This provides an excellent opportunity to create Grid-enabled equivalents that are made available using very different licensing arrangements. This creates a classic ‘disruptive technology’ development opportunity as described in the ‘Innovator’s Dilemma’ [Christensen, 00]. The issue is deciding which software should be developed;

b) The creative and digital industries consist of many micro organizations. In many cases these companies cannot afford to create expensive computing facilities but the nature of their business means that any competitive edge requires the usage of such facilities. The service and price models should be designed to enable these organizations to provide their services at globally competitive rates;

c) The classic trade-off in traditional software is that of memory versus processor power. Large memory usage can be used to compensate for slow processors and vice versa. Grid provides an opportunity to optimise both dimensions. Moore’s Law [Moore, 65] provides a further advantage such that over the next five years we can expect an eight-fold increase in processing speed coupled with an eight-fold decrease in costs i.e. a factor of 64 improvement in the processing/cost ratio. This will allow even the most brute-force software solutions to become cost effective and useful;

d) The success or otherwise of Grid-based services will be determined by the cost and pricing models. The sub-region should not focus on establishing the perfect set of models. Instead, the focus should be on establishing commercial services using a pricing model that is attractive to the target user community. First, the market should be created. Second, competition should be limited by reducing the service price while maintaining a high quality of service.
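The arithmetic behind the factor of 64 quoted in item c) can be made explicit. An eight-fold gain in five years corresponds to three doublings, i.e. one doubling roughly every 20 months. That doubling period is inferred here from the eight-fold figure and is not stated in this report; the sketch also assumes, as the text does, that cost falls at the same rate as speed rises.

```python
def improvement_factor(years: float, doubling_period_months: float) -> float:
    """Growth factor after `years` if capability doubles every `doubling_period_months`."""
    return 2.0 ** (years * 12.0 / doubling_period_months)


# Assumption: a 20-month doubling period, inferred from the eight-fold figure.
speed_gain = improvement_factor(5, 20)      # processing speed multiplier
cost_reduction = improvement_factor(5, 20)  # cost divisor, assumed to follow the same trend
ratio_gain = speed_gain * cost_reduction    # improvement in processing per unit cost

print(speed_gain, cost_reduction, ratio_gain)  # 8.0 8.0 64.0
```

The result is sensitive to the assumed doubling period: with a 24-month period the same calculation gives roughly a 5.7-fold gain in each dimension and a combined improvement of about 32, so the factor of 64 should be read as an indicative figure.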

4.1 Partners & Partnership

The High Performance Data Centre must be established through collaboration between the local funding agencies, the public sector and the industrial community. The key players and other sub-regional activities are:

a) White Rose Grid – the Leeds, Sheffield and York Universities consortium that is developing a research-oriented Grid facility. The HPDC could become the fourth node. Either the University of Sheffield or Sheffield Hallam University could be the location for the secondary sites;

b) eCampus – the creation of a new technology park which will be focused on housing new technology ventures. eCampus is a strong candidate for the location of the primary site;

c) KLI & CCH – both of these new centres will require computing facilities. The KLI and CCH could be co-located with the HPDC. If either centre is not located in the eCampus then they could also be hosts for the secondary sites;

d) Software Factory – the University of Sheffield is looking at commercially exploiting the software that has been developed internal to the University as part of its research activities. It will be possible to develop Grid-enabled versions of this software;

e) Advanced Materials Research Park – the science and engineering activities undertaken will require the usage of extensive computing facilities. The HPDC would be an ideal service provision for the research park;

f) Industrial (local) – there are many potential users or organizations that would provide services through the HPDC e.g. Ufi, Fluent, Jennic, OCF, HSBC, etc. These organizations should be approached to establish support and collaborative ventures. It could be possible for one of these companies to host a secondary site;

g) Industrial (non-local) – vendors such as SUN Microsystems, IBM and HP will certainly wish to participate in the creation of the HPDC. Significantly discounted hardware should be available from these companies;

h) Further Education colleges – Doncaster College is developing an ‘Access Grid’ with organizations in the US to provide a distributed orchestra. This would provide an opportunity to investigate commercial possibilities for such a service.

4.1.1 White Rose Grid

The successful development of the HPDC within South Yorkshire does not depend on the usage of the White Rose Grid. It is important that an alternative to the White Rose Grid is developed because:

- The White Rose Grid will always be heavily used by the host University research departments. It is very unlikely that there will be enough spare capacity to mount a viable commercial service;

- It is unlikely that the White Rose Grid will be sustainable by the host Universities without significant Government funding. It is also unlikely that Government funding will be sustained at the required level after the next five years. Therefore, those Universities will need access to an alternative solution;

- While the White Rose Grid is actively seeking commercial collaborations, these are primarily oriented to supporting the research interests of the host Universities. In general, such collaborations are only sustainable with large and medium-sized companies. Support for small and micro-sized organizations needs to come from elsewhere.

4.2 Timeline & Delivery

A proposed time-line for the establishment of the HPDC is given in Table 4.1.

Table 4.1 Proposed development time-line for the provision of the HPDC.

| Id | Activity | Completion Date |
|---|---|---|
| 1. | | Jul 2004 |
| 2. | Funding Agency Authorisation for HPDC Establishment | Oct 2004 |
| 3. | Detailed Design of the HPDC | Feb 2005 |
| 4. | Service Provision from the HPDC Secondary Sites | June 2005 |
| 5. | Completed Construction of the HPDC Primary Site | June 2006 |
| 6. | Full Service Provision | Sept 2006 |

This time-line is adventurous and it is more realistic to expect the end date to be Sept 2007 i.e. twelve months later than depicted in Table 4.1. The intermediate dates would need to be adjusted appropriately.
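One way to adjust the intermediate dates is to stretch the whole schedule proportionally so that the final milestone moves out by twelve months. The sketch below illustrates that approach only; the report does not say how the dates should be adjusted, so the proportional rule, the rounding to whole months and the helper names are all assumptions.

```python
# Completion dates from Table 4.1 as (year, month), keyed by activity Id.
milestones = {
    1: (2004, 7),
    2: (2004, 10),
    3: (2005, 2),
    4: (2005, 6),
    5: (2006, 6),
    6: (2006, 9),
}


def to_months(year_month):
    """Convert (year, month) to a count of months for easy arithmetic."""
    year, month = year_month
    return year * 12 + (month - 1)


def from_months(total):
    """Convert a month count back to (year, month)."""
    return (total // 12, total % 12 + 1)


def stretch(schedule, extra_months):
    """Scale every milestone's offset from the first date so that the last
    milestone slips by `extra_months` (hypothetical adjustment rule)."""
    start = min(to_months(d) for d in schedule.values())
    end = max(to_months(d) for d in schedule.values())
    span = end - start
    return {
        key: from_months(start + round((to_months(d) - start) * (span + extra_months) / span))
        for key, d in schedule.items()
    }


adjusted = stretch(milestones, 12)
for key in sorted(adjusted):
    print(key, adjusted[key])
```

Under this rule the first milestone stays fixed and the final one lands on Sept 2007. A uniform twelve-month shift of every date after authorisation would be an equally defensible alternative.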

4.3 Outline Costs & Potential Match Funding

4.3.1 Costs

Outline costs are shown in Table 4.2 (year 1 would be assumed to begin in Jan 2005). The cost categories include:

- Build – only the primary site requires a new build. It is assumed that the two secondary sites would be located in established computer centres;

- Capital – the costs of installing the full hardware and software system, the service applications plus the leasing of the core telecommunications infrastructure and the building of the wireless access network. This cost assumes a five-year replacement cycle (this may need to be adjusted to a three-year cycle to maintain a commercially competitive service);

- Recurrent/per annum – the recurrent costs over a three-year period. This cost includes the telecommunications infrastructure and access network usage cost;

- Staffing/per annum – the staffing costs for supporting the three sites.

Table 4.2 Outline costs for the provision of the high performance data centre.

The £14.5 million total should be considered to be the lowest amount required to provide an appropriate comprehensive service. A more realistic total should be £16-18 million depending on the processing power to be supplied and the full telecommunications costs. Once fully established the annual costs will be in the order of £3 million.

4.3.2 Source & Match Funding

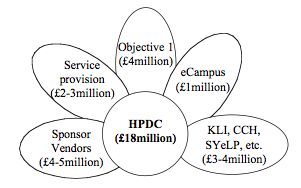

The potential sources of matched funding are:

- Hardware vendors such as SUN Microsystems should be expected to provide a discount of at least 40% on the commercial costs of their hardware and software. This would provide matching of £2million for the initial capital investment and £400k per annum for the upgrades. This would create matching of £2.8million over the three-year period;

- Organizations such as the Ufi have a significant requirement for data centre support. It should be possible to establish a commercial service income once the secondary sites are operational. This may provide a revenue stream of £1million over the three years;

- Collaborative research opportunities with the Universities to draw down funding from PPARC, EPSRC and OST could provide a further £1million over the three years. Collaborative bids with the White Rose GRID should be undertaken;

- It should be possible to use the following initiatives to offset this investment (these are initiatives that would be expected to make significant usage of a high performance data centre in their own right and so overall cost savings are possible using complementary funding allocations) to at least £2million over the three years:

o The location of the primary site on the eCampus would enable a significant proportion of the build costs to be discounted. Some specialist build costs would still need to be allocated e.g. the internal configuration;

o KLI, CCH and the Design Centre (a total funding allocation of at least £20million has been allocated for these three initiatives). At least one of these centres could be co-located with either the primary or a secondary site;

o SYeLP has committed a large amount of money for supporting remote service provision. It should be more cost effective to use the local HPDC to support some of these services.
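The matched-funding estimates listed above can be totalled with a short calculation. This is a rough sketch: the category labels are shorthand introduced here, the comparison against the £14.5 million lowest total assumes that figure covers the same three-year period, and the reading that the vendor figure includes two years of upgrades is inferred from the numbers rather than stated in the report.

```python
# Matched-funding estimates over three years, in thousands of pounds (GBP k).
# Labels are illustrative shorthand for the four bullet points above.
match_funding_k = {
    "hardware_vendor_discounts": 2800,
    "commercial_service_income": 1000,
    "collaborative_research_funding": 1000,
    "complementary_initiatives": 2000,  # stated as "at least"
}

# The vendor figure is consistent with the initial discount plus two years
# of upgrades: 2000 + 2 * 400 = 2800 (an inference, not stated in the text).
implied_upgrade_years = (match_funding_k["hardware_vendor_discounts"] - 2000) // 400

total_match_k = sum(match_funding_k.values())

# Assumption: the lowest outline total of GBP 14.5 million covers the same period.
lowest_total_cost_k = 14500
match_share = total_match_k / lowest_total_cost_k

print(f"Total matched funding: {total_match_k}k")
print(f"Share of lowest outline cost: {match_share:.0%}")
```

On these figures the identified matching covers a little under half of the lowest outline cost, which is consistent with the need for further work on funding sources.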

A visualization of the potential sources of funding is shown in Figure 4.1.

Figure 4.1 Funding sources for the HPDC.

Further work is required to identify all of the sources and to ensure that these cover the full costs for the creation of the HPDC as well as covering the annual costs.

4.4 Further Work

The further work that needs to be undertaken as soon as possible includes (these are not in any particular order):

- A benefit analysis for all of the stakeholders – an investigation into the benefits that would accrue to all of the funding bodies and companies involved in the creation of the HPDC;

- A risk analysis for all of the stakeholders – an evaluation of the risks involved in the creation of the HPDC and an investigation into how these risks can be minimized;

- Review of client needs and potential market size – an analysis of the size of the potential market for the HPDC services in terms of type and range of clients;

- Technology and services definition – the high level but detailed definition of the services that are to be supplied by the HPDC. The corresponding technology required to support these services must then be detailed. The provision of both Grid and Utility Computing approaches needs to be evaluated;

- Commercial model – the development of the commercial model that will be required to sustain the HPDC for a period of ten years. This includes the development of the full revenue and cost analysis and must include the renovation costs for the equipment base.

4.5 Next Steps and the SY-HPDC Pilot

One way forward is to establish a South Yorkshire High Performance Data Centre (SY-HPDC) Pilot. The Pilot should run for at least 12 months.

4.5.1 Aims & Objectives

It is recommended that the aim of the Pilot is to investigate the business models that are required to establish commercial sustainability. Therefore the objectives should be to:

- Create commercial services that can establish a revenue stream within the 12-month lifetime of the Pilot. This should include establishing commercial relationships with organizations such as ARM who would use the HPDC;

- Confirm the ways in which the SY-HPDC can be used to support the KLI and CCH. The KLI and CCH could be users of, and sources of, services;

- Establish the mechanisms by which collaborations with the Software Factory can be used to create novel commercially viable Grid-enabled applications.

4.5.2 Outline Specifications

The Pilot should consist of:

- A single site multi-processor farm and multi-terabyte data repository;

- A high speed Internet connection with bandwidth in the region of 100Mbps-1Gbps;

- Applications that together cover the following range of requirements (this should enable several business service models to be evaluated):

o Large data storage requirements;

o Heavy processing requirements;

o Multi-copy software processing required by the creative and digital industries.

It should be stressed that the aim is to look at the establishment of viable commercial services.

4.5.3 Outline Funding Proposal

It is recommended that funding in the region of £1,000,000-£1,250,000 is made available for the Pilot over a period of 12 months. It should be expected that most of the matched funding is supplied by the hardware and systems software suppliers. Matched funding should be in the region of £350,000-£500,000.

- GRID Computing: Making the Global Infrastructure a Reality, F. Berman, G.C. Fox and A.J.G. Hey, Wiley, 2003, ISBN 0-470-85319-0.

- The Innovator’s Dilemma, C.M. Christensen, Harper Business, 2000, ISBN 0-06-662069-4.

- The New Data Center: A Quiet Revolution, An Immense Opportunity, John Gallant, Network World, February 2003.

- Global GRID Forum web-site: http://www.ggf.org.

- A Prospectus for cluster development in South Yorkshire: a catalyst for change, Objective 1 South Yorkshire, July 2002.

- SYeLP Programme Brief, Dunelm Services Limited, January 2000.

- SYeLP Pilot Project ITT/RFP Deliverables, Dunelm Services Limited, April 2000.

- South Yorkshire Action Plan, South Yorkshire Forum, November 2001.

- Regional Action Plan for the Yorkshire and Humber Economy, Yorkshire Forward, November 2001.